In today’s data-driven world, companies face the daunting task of extracting valuable insights from vast amounts of data. AI opens up entirely new avenues, but when it comes to precisely analyzing large numbers of documents, general-purpose systems such as ChatGPT reach their limits. This is where Tucan.ai comes in, a pioneer in using chunking for precise and efficient data analysis. This blog post outlines the challenges organizations face with conventional AI systems and introduces Tucan.ai, which is changing the landscape of AI-powered knowledge management.

Download AI knowledge management one-pager


Problems with common AI systems such as ChatGPT

Given the growing need for companies to use their large amounts of data for intelligent knowledge management, connecting that data to common AI systems such as ChatGPT looks promising. However, this approach poses several significant challenges that must not be ignored when planning and implementing such systems.

Risk of AI hallucinations

When company data and databases are connected to common AI systems such as ChatGPT, there is a risk of so-called AI hallucinations: the AI generates answers that sound plausible but are not based on the actual, company-specific data. Such erroneous or misleading information can have a significant impact, especially where decisions depend on accurate and reliable data. Companies therefore have to monitor and validate the output of such systems continuously to catch incorrect information.

Missing source references

Another shortcoming of common AI systems for corporate knowledge management is that their answers lack source references. Without them, the information provided by the system is difficult to trace and check for accuracy. In environments where data accuracy and reliability are critical, this makes it much harder to use such systems as a basis for informed business decisions.

Token limits

Common AI systems can only process a limited number of tokens (the word fragments a language model reads) per request, which causes problems when they are connected to company databases for knowledge management. Because of this limit, the AI cannot analyze extensive company data in one go. For companies that need to analyze large volumes of text, this can significantly reduce the usability and efficiency of the AI system.
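To make the token limit concrete, here is a minimal Python sketch that checks whether a set of documents fits into a context window. It assumes one token per whitespace-separated word, which understates real counts because models use subword tokenizers, and the 8,000-token limit is a hypothetical value, not that of any particular model.

```python
# Minimal sketch: do these documents fit into a model's context window?
# Assumption: one token per whitespace-separated word. Real models use
# subword tokenizers, so actual token counts are usually higher.

CONTEXT_LIMIT = 8_000  # hypothetical limit, not tied to any specific model


def approx_tokens(text: str) -> int:
    """Rough token estimate: count whitespace-separated words."""
    return len(text.split())


def fits_in_context(documents: list[str], limit: int = CONTEXT_LIMIT) -> bool:
    """Return True if all documents together stay within the token limit."""
    return sum(approx_tokens(doc) for doc in documents) <= limit


# Two documents of 5,000 words each: about 10,000 tokens in total.
docs = ["clause " * 5_000, "clause " * 5_000]
print(fits_in_context(docs))  # False
```

Whatever does not fit has to be split up, shortened or left out, which is exactly the gap chunking is meant to close.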

Data protection in terms of the GDPR

Integrating common AI systems into a company’s data infrastructure raises data protection concerns, especially under the GDPR. Many of these systems are trained on extensive datasets that may contain personal information collected without the clear consent of the individuals concerned. For companies, this creates a legal risk if their AI application does not meet the GDPR’s strict data protection requirements. Ensuring compliance requires additional resources, including a transparent explanation of what data is processed and how.


Tucan.ai: An innovative solution, Made in Germany

With its technology developed in Germany, Tucan.ai represents a pioneering approach in the field of AI-supported knowledge management. By using advanced algorithms for chunking, Tucan.ai transforms the way companies access and analyze their data. This precision-oriented approach enables efficient segmentation of text data into thematically relevant sections, which are integrated into vector databases for transparent and verifiable analysis. Tucan.ai sets new standards for data analysis by focusing on precision, efficiency and data protection.

What is chunking?

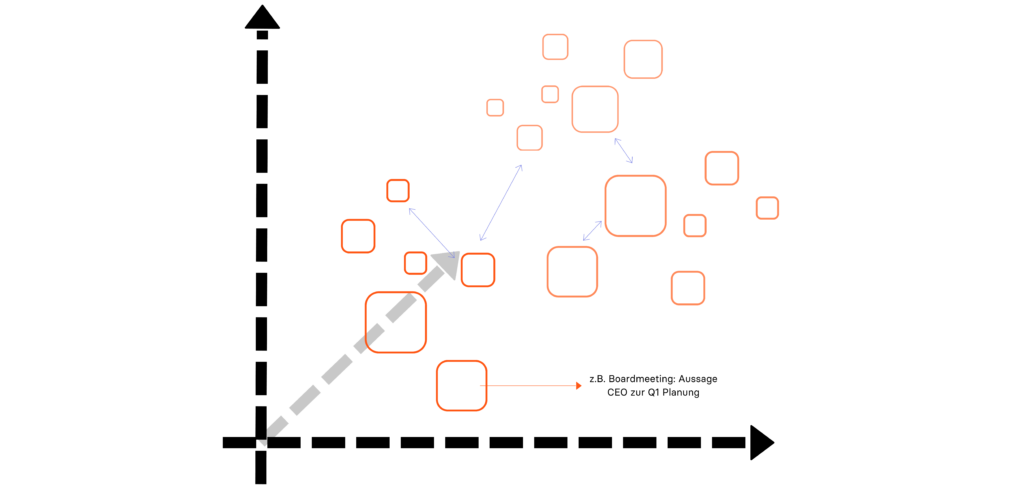

At the heart of advanced knowledge management is the concept of chunking, an approach that is increasingly being considered by companies to improve the efficiency and precision of data analysis using AI. Chunking refers to the process of dividing large amounts of text data or information into smaller, thematically relevant units, also known as “chunks”. This technique makes it possible to reduce the volume and complexity of data by using only the most relevant sections for analysis and processing.
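Tucan.ai’s own chunking algorithm is not described here, so the following Python sketch only illustrates a simple, commonly used structural approach: it splits text at blank lines (paragraphs) and packs whole paragraphs into chunks of at most `max_words` words. Genuinely thematic chunking would also take meaning into account, for example via embeddings; the function name and size limit are assumptions for illustration.

```python
def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Split text into chunks of whole paragraphs, each at most max_words words.

    Paragraphs are separated by blank lines. A paragraph longer than max_words
    becomes its own (oversized) chunk rather than being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in paragraphs:
        n_words = len(para.split())
        # Close the current chunk if adding this paragraph would exceed the limit.
        if current and current_len + n_words > max_words:
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(para)
        current_len += n_words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


text = (
    "Liability is limited.\n\n"
    "Termination requires notice.\n\n"
    "Personal data is protected."
)
chunks = chunk_text(text, max_words=6)
print(len(chunks))  # 2
```

Keeping paragraphs intact is a deliberate choice: a chunk that ends mid-sentence is harder for both the AI and a human reviewer to interpret.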

Significance for businesses

For companies, using chunking in AI-based knowledge management systems offers several significant advantages. First, it enables targeted analysis of individual data blocks without the AI being overwhelmed by irrelevant information. This leads to more precise and relevant results and also makes data processing more efficient. In addition, companies can ensure that their AI systems focus on the data that actually matters, which is especially valuable when processing sensitive or confidential information.

Increased efficiency in data processing

By dividing data into smaller, thematically coherent blocks, chunking enables targeted processing and analysis. This prevents the system from being overwhelmed by the sheer volume of information, which is particularly important when processing complex or multi-layered data sets. The ability to analyze specific data sections precisely and quickly leads to a significant increase in efficiency in knowledge management.

Improved accuracy and relevance of insights

One of the core problems of large data sets is the identification of relevant information. The principle of chunking enables AI systems to analyze precisely those data blocks that are relevant to a specific query or problem. This leads to more precise answers and insights, as irrelevant information is systematically filtered out. For companies, this means that they can rely on the insights generated to make informed decisions.
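As an illustration of analyzing only the relevant blocks, here is a minimal Python sketch that ranks chunks by how many query terms they contain and keeps the top `k`. Simple keyword overlap stands in for the semantic (embedding-based) similarity a production system would typically use; the function names and the tiny stop-word list are assumptions for illustration.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real systems use larger lists or embeddings.
STOPWORDS = {"a", "an", "the", "is", "to", "of", "and", "in", "what", "how", "can", "be"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return its alphanumeric words, minus stop words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def top_k_chunks(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return up to k chunks that share terms with the query, best match first."""
    query_terms = set(tokenize(query))

    def score(chunk: str) -> int:
        counts = Counter(tokenize(chunk))
        return sum(counts[term] for term in query_terms)

    # sorted() is stable, so equally scored chunks keep their original order.
    ranked = sorted(chunks, key=score, reverse=True)
    # Chunks with no matching terms are filtered out entirely.
    return [chunk for chunk in ranked if score(chunk) > 0][:k]


chunks = [
    "The supplier's liability is capped at the contract value.",
    "Either party may terminate with three months notice.",
    "Customer data is stored in Germany.",
]
print(top_k_chunks("What is the notice period to terminate?", chunks))
# ['Either party may terminate with three months notice.']
```

Only the matching chunk is passed on for analysis; the two unrelated chunks never reach the model.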

Simplifying complex data analysis

The ability to divide large and complex data sets into manageable units simplifies data analysis considerably. This structure makes it possible to carry out in-depth analyses without getting lost in the data. For fields such as market research, customer analytics or contract analysis, where detailed and specific insights are required, chunking offers a clear advantage.

Improved data protection and compliance

The principle of chunking also makes it possible to control and restrict the processing and analysis of data. This can be particularly crucial with regard to data protection and compliance with regulations such as the GDPR. Companies can be more specific about what data should be analyzed, which minimizes the risk of processing sensitive information without proper authorization.
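A minimal Python sketch of such a restriction, assuming each chunk is tagged during ingestion with a hypothetical `contains_personal_data` flag: chunks carrying personal data are excluded unless the analysis is explicitly authorized to process them. How such flags are assigned (manually, by rules or by a classifier) is outside the scope of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str
    contains_personal_data: bool  # hypothetical flag assigned at ingestion time


def analyzable_chunks(chunks: list[Chunk], allow_personal_data: bool = False) -> list[Chunk]:
    """Return only the chunks this analysis is authorized to process."""
    return [c for c in chunks if allow_personal_data or not c.contains_personal_data]


chunks = [
    Chunk("Quarterly sales summary.", "report.pdf", False),
    Chunk("Employee contact list.", "hr_export.xlsx", True),
]
print([c.source for c in analyzable_chunks(chunks)])  # ['report.pdf']
```

Because the filter runs before any AI processing, flagged chunks are never sent to the model in the first place, rather than being removed from the output afterwards.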


Chunks in vector databases: Enabling precise source references

Integrating thematic blocks, or “chunks”, into vector databases is an innovative approach that is changing how data is stored and retrieved, especially in AI-supported knowledge management. But why is this integration so effective, and how does it enable accurate source references?

The importance of vector databases

Vector databases store information as vectors, i.e. numerical representations (embeddings) of text or other data, a format that machine learning algorithms can analyze and compare directly. This type of database is built for high-dimensional data and supports fast similarity searches, a significant advantage when looking for specific information within huge data sets.

Connecting chunks and vector databases

By storing chunks as individual vectors in vector databases, AI systems can quickly and efficiently access exactly the data sections that are relevant to a query. This leads to a precise and fast assignment of source references. Each chunk can be provided with specific metadata, including source information, which enables traceable and verifiable data analysis.
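The following Python sketch shows the idea in miniature: each chunk is stored as a vector alongside metadata (source file and page), and a query returns the most similar chunks together with their source references. The `embed` function is a toy hashed bag-of-words stand-in for a real embedding model, and the in-memory `VectorStore` class is hypothetical; it is not the API of Tucan.ai or of any vector database product.

```python
import math
import re
import zlib
from dataclasses import dataclass, field


def embed(text: str, dim: int = 1024) -> list[float]:
    """Toy embedding: hashed bag of words, L2-normalized.

    Stand-in for a real embedding model (assumption for illustration).
    """
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


@dataclass
class VectorStore:
    vectors: list[list[float]] = field(default_factory=list)
    records: list[dict] = field(default_factory=list)

    def add(self, text: str, source: str, page: int) -> None:
        """Store a chunk's vector together with its source metadata."""
        self.vectors.append(embed(text))
        self.records.append({"text": text, "source": source, "page": page})

    def search(self, query: str, k: int = 1) -> list[dict]:
        """Return the k most similar chunks, each with its source reference."""
        q = embed(query)
        # Vectors are normalized, so the dot product equals cosine similarity.
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        best = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
        return [{**self.records[i], "score": round(scores[i], 3)} for i in best]


store = VectorStore()
store.add("Liability is capped at the contract value.", "contract_a.pdf", page=4)
store.add("Either party may terminate with three months notice.", "contract_a.pdf", page=7)
hit = store.search("terminate notice")[0]
print(hit["source"], hit["page"], hit["score"])
```

Because every hit carries its source file and page, an answer built from these chunks can state exactly where each piece of information comes from.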

Advantages for businesses

1. Increased transparency:

By explicitly assigning sources to the analyzed data segments, the origin of the information becomes transparent, which is crucial for compliance and building trust.

2. Enabling precise source references:

This makes it easier to validate and verify the insights generated by the AI, which is particularly important in areas where data accuracy is critical.

3. Improvement of data quality and analysis results:

The clear allocation of data to the corresponding sources minimizes confusion and errors that could arise from incorrect or misleading information.

4. Efficient data management:

The ability to access relevant information in a targeted manner without having to search through entire databases saves time and resources.


Real-world examples

The variety and breadth of potential applications for chunking in the corporate landscape is enormous. Below you will find two selected examples that merely scratch the surface of this multi-faceted approach and illustrate the transformative power that chunking can have for specific professional requirements.

Contract analyses for lawyers

A prime example of chunking in the corporate environment comes from legal practice. Lawyers often have to analyze extensive contracts to identify and understand specific clauses, conditions and obligations. With chunking, a contract can be divided into smaller units, each covering a specific aspect such as liability provisions, termination rights or data protection clauses. Lawyers can then access the relevant sections quickly and carry out comprehensive contract analyses with high precision.
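A minimal Python sketch of clause-level chunking, assuming each clause starts on its own line with a heading such as "1. Liability" or "§ 2 Termination". Real contracts vary widely in layout, so the regular expression here is an illustrative assumption, not a robust contract parser.

```python
import re

# Assumption: a clause heading is a line starting with "§ <number>" or
# "<number>." followed by a capitalized title, e.g. "1. Liability".
HEADING = re.compile(r"^(?:§\s*\d+|\d+\.)\s+[A-Z].*$", re.MULTILINE)


def split_clauses(contract: str) -> dict[str, str]:
    """Map each clause heading to the text between it and the next heading."""
    matches = list(HEADING.finditer(contract))
    clauses = {}
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(contract)
        clauses[match.group().strip()] = contract[match.end():end].strip()
    return clauses


contract = """1. Liability
The supplier's liability is capped at the contract value.
2. Termination
Either party may terminate with three months notice.
3. Data protection
Personal data is processed only within the EU."""

for heading, body in split_clauses(contract).items():
    print(f"{heading}: {body}")
```

Each clause then becomes its own chunk, so a question about termination rights only needs to be answered against the termination clause.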

Analysis of extensive data sets for market researchers

For market researchers faced with the challenge of gaining detailed insights from vast amounts of market data, chunking offers an effective solution. By dividing the data into thematically relevant chunks, the AI can search specifically for trends, patterns and consumer behavior in different segments of the market. For example, data streams from social media, customer feedback and purchase histories can be divided into separate chunks to address specific questions regarding product preferences or buyer demographics. This targeted analysis strategy enables market researchers to make more precise predictions and support well-founded decisions.
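A minimal Python sketch of that grouping step, assuming each raw record is a dictionary with hypothetical `channel`, `segment` and `text` fields: records are collected into one chunk per channel and market segment, so a later question about, say, younger buyers on social media only has to consider the matching chunk.

```python
from collections import defaultdict


def chunk_by_segment(records: list[dict]) -> dict[tuple[str, str], list[str]]:
    """Group raw records into one chunk per (channel, market segment) pair."""
    chunks: dict[tuple[str, str], list[str]] = defaultdict(list)
    for record in records:
        chunks[(record["channel"], record["segment"])].append(record["text"])
    # Convert back to a plain dict so missing keys raise instead of silently appearing.
    return dict(chunks)


records = [
    {"channel": "social_media", "segment": "18-29", "text": "Love the new flavour."},
    {"channel": "feedback", "segment": "30-49", "text": "Packaging is hard to open."},
    {"channel": "social_media", "segment": "18-29", "text": "Too expensive for students."},
]
for key, texts in chunk_by_segment(records).items():
    print(key, len(texts))
```

Grouping by source and segment keeps each chunk thematically coherent, which is what makes targeted follow-up questions possible.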

Efficiently manage information floods with AI

In an age of information overload, it is more important than ever for companies not only to manage their data, but also to use it intelligently. With its chunking technology developed in Germany and integration into vector databases, Tucan.ai offers a pioneering solution that prioritizes precision, efficiency and data protection. Whether it’s analyzing complex contracts, identifying market trends or making privacy-compliant decisions, Tucan.ai enables companies to revolutionize their data processing and make informed decisions based on verifiable and accurate data. Discover the transformative power of Tucan.ai and ensure your organization is at the forefront of data-driven decision making.